Classifying Panda Behavior (Neural Networks)

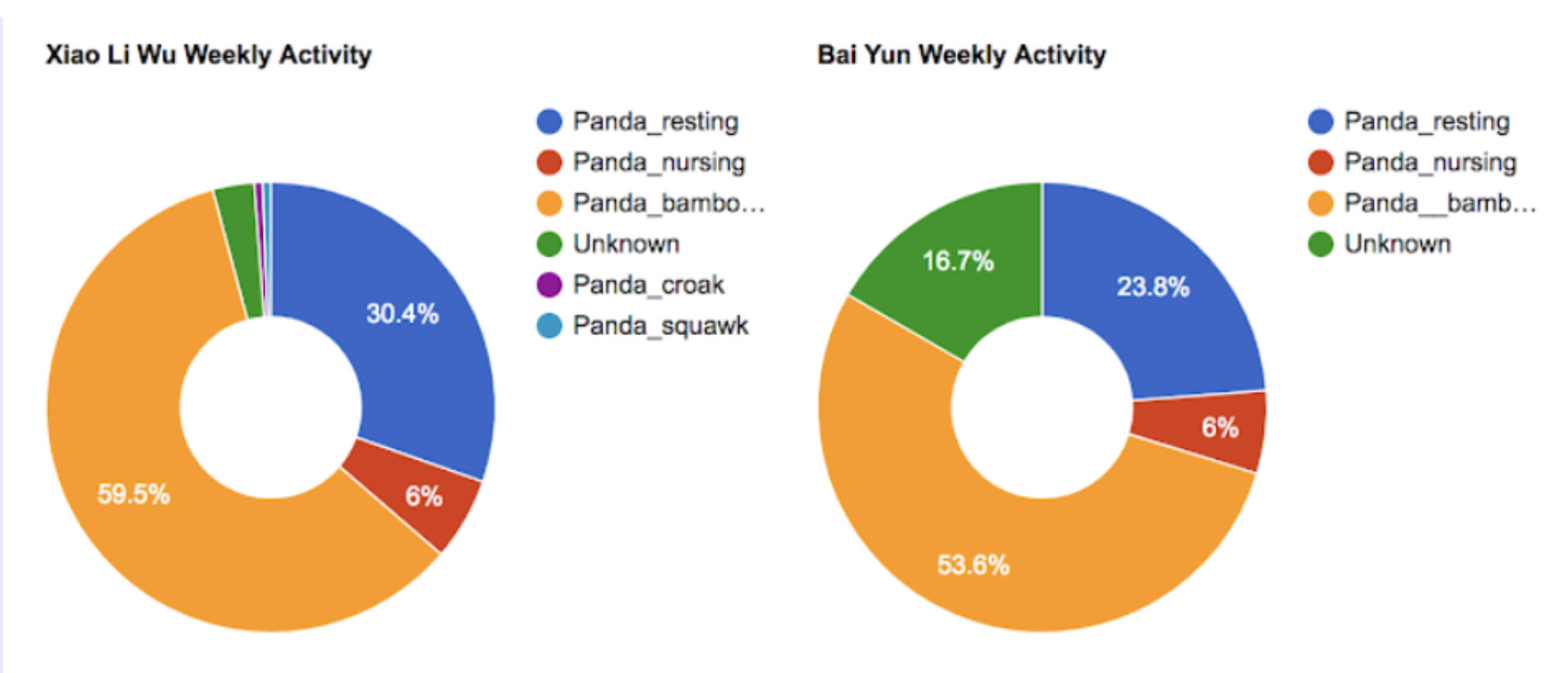

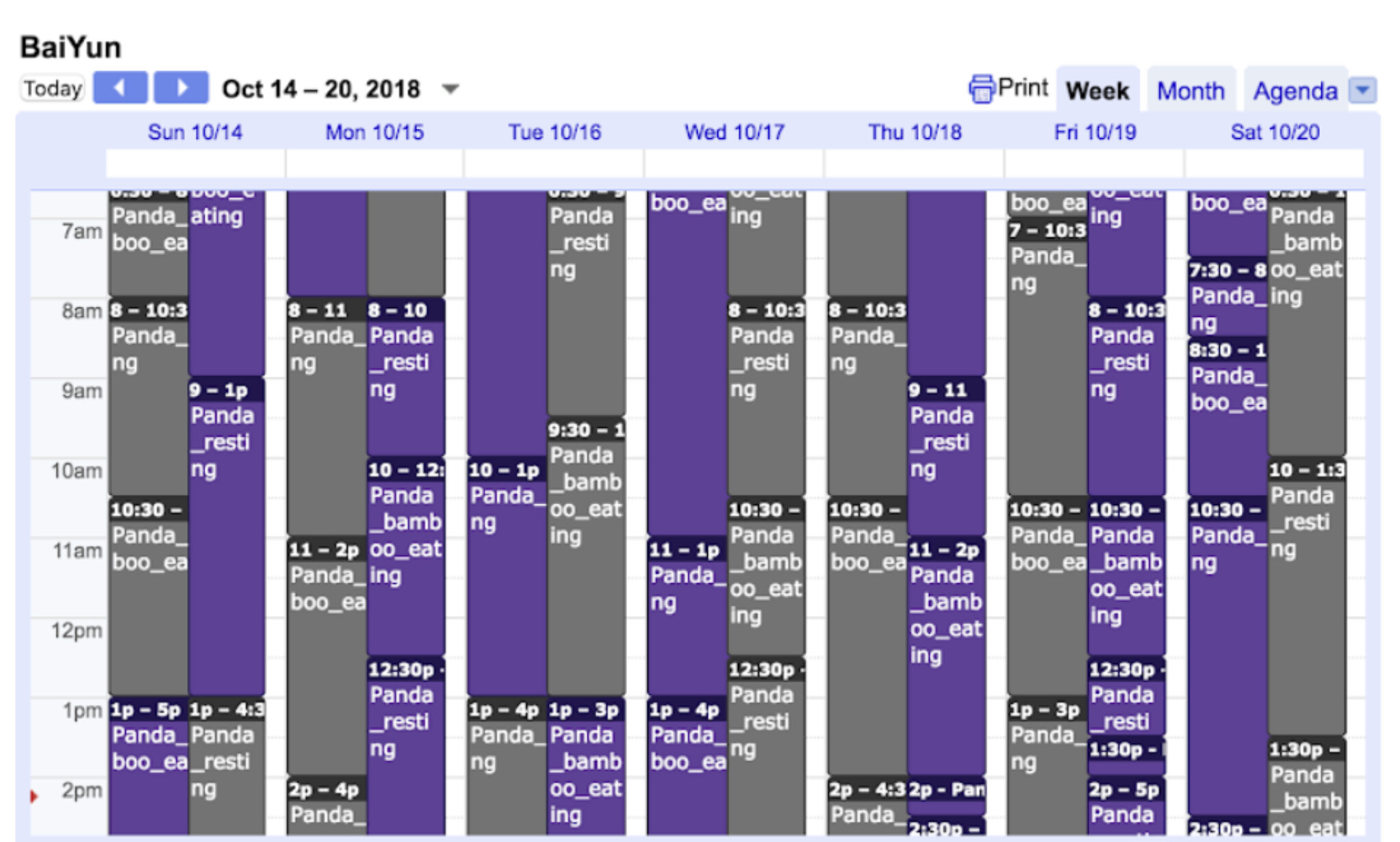

Using an open-source deep-learning approach, we demonstrate the successful real-time identification and classification of seven panda vocalizations, in addition to chewing and nursing sounds. Furthermore, we experimented with video skeleton tracking and motor behavior identification.

Understanding pandas is crucial to continued conservation success, yet GPS and accelerometer data alone give us limited capacity to monitor their behavior in the wild: they cannot distinguish, for example, among sleeping, eating, and nursing. Acoustic monitoring offers a promising approach to the detection and classification of many significant behaviors. In addition to improving our understanding of pandas directly, sound analysis also allows detection and analysis of nearby species, including birds, insects, and humans, each of which affects panda behavior in unknown ways.

By recording and analyzing sound using neural networks, one can non-invasively map behaviors in single individuals over long periods of time, offering new insight into their life histories and energy budgets.

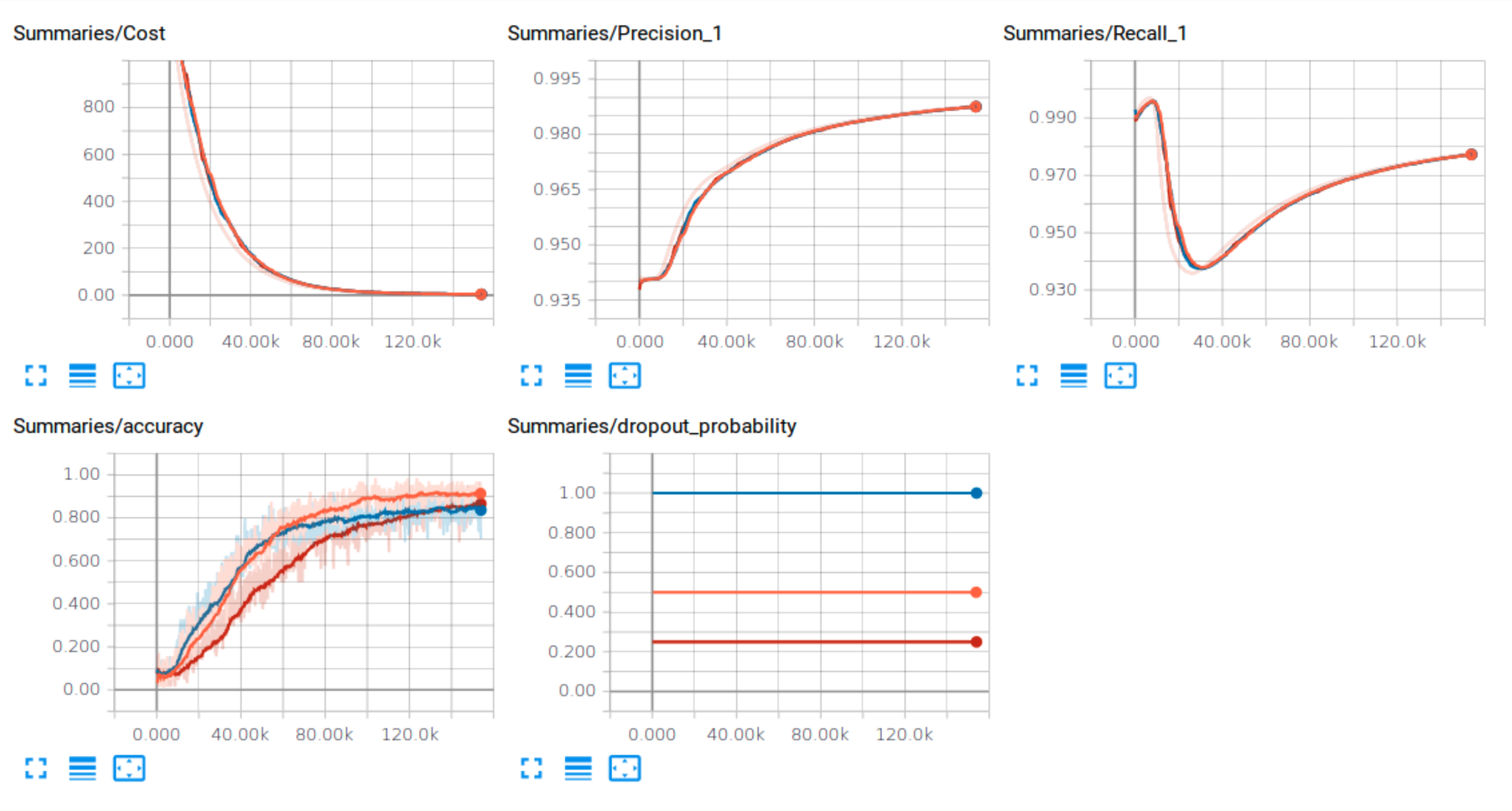

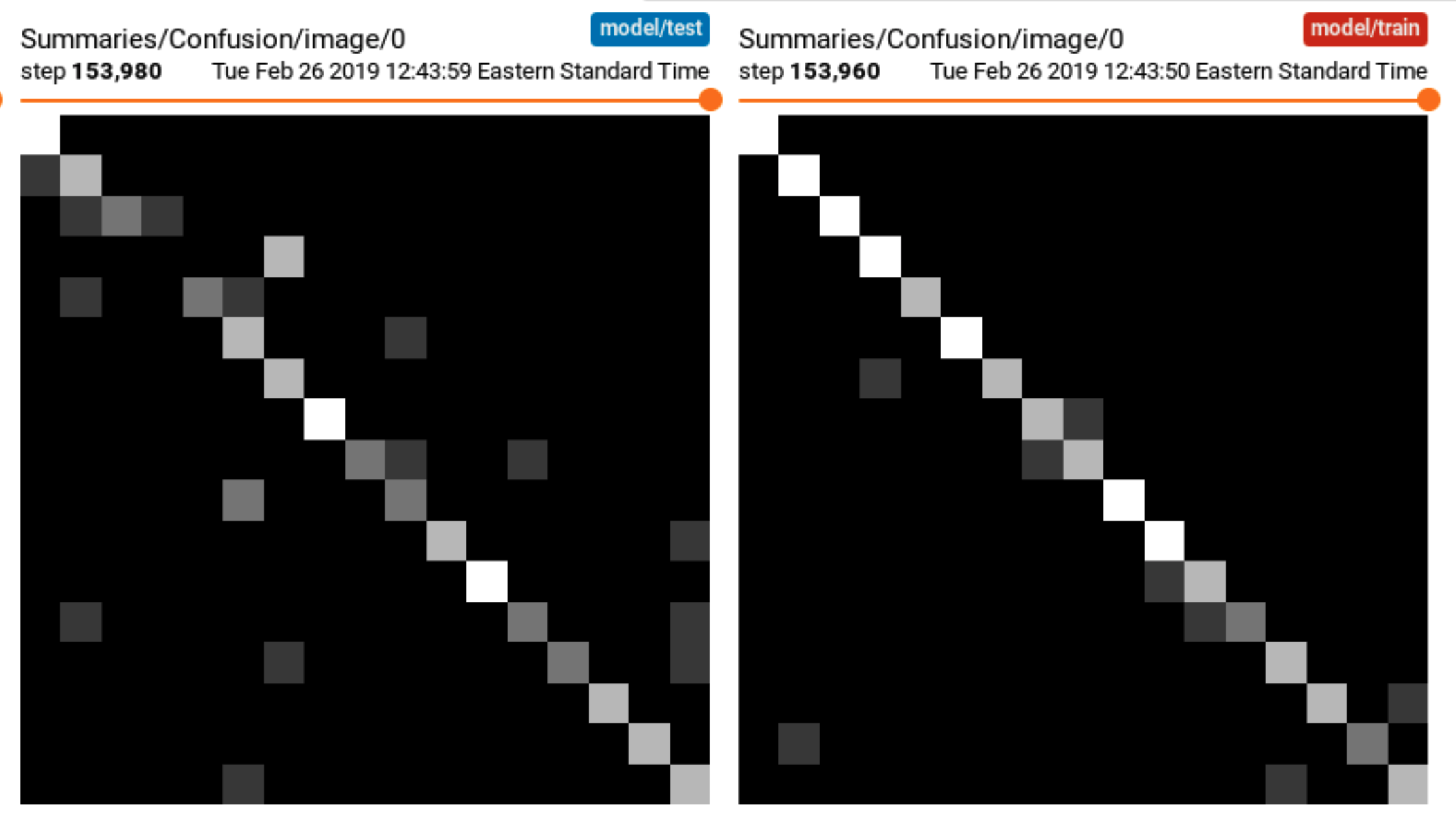

Trained on more than 1500 human-classified field recordings of freely behaving pandas in China, our AI correctly classified 94% of test cases.
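The 94% figure is an overall accuracy over the test cases. As a minimal sketch of how such a number, along with a per-class breakdown, could be computed from paired human and model labels: the label names below come from the text, while the `evaluate` helper and the toy data are hypothetical and invented purely for illustration. They are not the project's code or results.

```python
from collections import Counter

# Label set taken from the text: seven vocalizations plus chewing and nursing.
LABELS = ["bleat", "chirp", "honk", "moan", "noisy bark",
          "roar/growl", "tonal bark", "chewing", "nursing"]


def evaluate(true_labels, predicted_labels):
    """Return (overall accuracy, per-class recall) for paired label lists."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("need at least one test case")
    # How many test clips carry each human-assigned label.
    totals = Counter(true_labels)
    # How many of those clips the model labeled identically.
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    accuracy = sum(correct.values()) / len(true_labels)
    # Recall is only defined for labels that actually occur in the test set.
    recall = {label: correct[label] / totals[label]
              for label in LABELS if totals[label]}
    return accuracy, recall


# Toy data, invented for illustration only (NOT the project's results).
y_true = ["bleat", "bleat", "chirp", "honk", "moan", "chewing", "nursing", "nursing"]
y_pred = ["bleat", "chirp", "chirp", "honk", "moan", "chewing", "nursing", "chewing"]

accuracy, recall = evaluate(y_true, y_pred)
print(f"overall accuracy: {accuracy:.2f}")
for label, value in recall.items():
    print(f"{label:>12}: {value:.2f}")
```

Reporting per-class recall alongside the overall figure is useful because a single accuracy number can hide weak performance on call types that appear only rarely in the recordings.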

In addition, we were able to train a separate system to track pandas in videos and classify their movements (walking, climbing, etc.). This system requires further testing and training.

With B. Charlton (SD Zoo), C. Duhart (MIT), M. Owen (SD Zoo), R. Kleinberger (MIT), J. Baker (MIT), J. Sands (MIT).

Sample audio clips used to train the panda acoustic classifier neural network. The clips here are (in order) a typical “bleat”, “chirp”, “honk”, “moan”, “noisy bark”, “roar/growl”, and “tonal bark”. Over 1500 sound clips were used altogether.